Saving PCIbex recordings to S3 with a Python Lambda

For a while I have been trying to set up my own server at home on an old computer. Until that works, I have decided to use Amazon S3 to store participant recordings. Unfortunately, the official PCIbex guide is out of date. This post shows how to send PCIbex audio recordings directly to Amazon S3 using a Python AWS Lambda function. It is a modern replacement for the older JS/S3 guide on the official PCIbex website.

A few practical notes up front:

- This version uses Python and lets you control how the uploaded recordings are named and stored.

- AWS credits: Amazon often gives up to $200 in credits, which has been plenty for my dissertation-scale needs. Your university may also have a credits agreement, and you can independently apply for research credits using Amazon’s own portal.

- I’m not a fan of Amazon’s market power, but if you don’t have money for other services or a home server, AWS is a pragmatic choice.

- This walkthrough assumes you’re not self-hosting PCIbex; you’re using the main https://farm.pcibex.net site.

- Long term, I recommend learning the AWS CLI and doing most of this from the command line, since the console UI is clunky and ever-changing. I just don't know how to do that yet.

If the jargon feels like alphabet soup, ignore it for now. Roughly: S3 is a secure Dropbox; Lambda is a piece of code that runs when triggered; API Gateway is the doorbell that triggers the Lambda.

Ethics & compliance

Voice recordings are personally identifiable. Before collecting or storing them, talk to your institution’s security/compliance office (for me: UMD SPARCS). Describe what you collect, where it’s stored (S3), who can access it (IAM policies), and for how long.

The goal

- Call InitiateRecorder(<your-url>) at the start of the experiment.

- Call UploadRecordings(...) between trials or at the end.

- Your Lambda receives the uploaded ZIP and writes it to S3, then returns { "ok": true }.

No extra JavaScript in PCIbex, no PHP server.

Architecture (one screen)

- PCIbex runs in the participant’s browser and records audio.

- It calls your API Gateway URL.

- API Gateway triggers a Python Lambda.

- The Lambda accepts multipart/form-data from PCIbex and saves the file to S3.

- The Lambda responds { "ok": true } so PCIbex continues.

We’ll build this once and reuse it for future experiments.

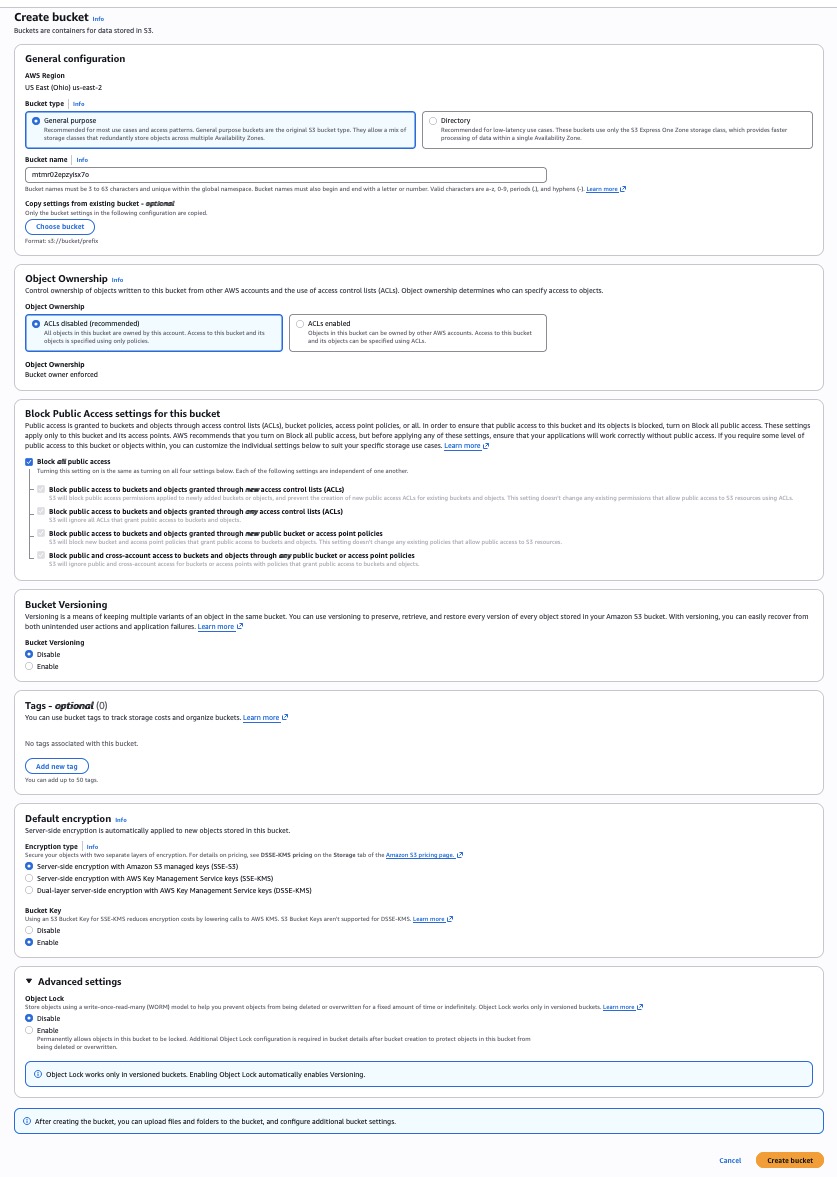

Create the S3 bucket

- Open AWS Console → S3 → Create bucket: https://aws.amazon.com/pm/serv-s3/

- Pick a globally unique name (example): pcibex-recordings-demo-2025. Prefer an unpredictable name. I generate a random string with the shell one-liner below, which prints it and copies it to the clipboard (pbcopy is macOS-only):

s=$(curl -s "https://www.random.org/strings/?num=1&len=15&digits=on&upperalpha=off&loweralpha=on&unique=on&format=plain&rnd=new"); echo "$s"; printf "%s" "$s" | pbcopy

- Choose the same Region you’ll use for Lambda (e.g., us-east-2).

- Keep Block all public access enabled.

- (Optional) Turn on Default encryption.
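If you would rather not depend on random.org or pbcopy, the same kind of unpredictable name can be generated locally. This is a minimal sketch using Python's standard secrets module; the helper name random_bucket_name and the pcibex- prefix are illustrative assumptions, not part of any AWS tooling.

```python
import secrets
import string

# S3 bucket names may use lowercase letters, digits, and hyphens (3-63 characters).
ALPHABET = string.ascii_lowercase + string.digits


def random_bucket_name(prefix: str = "pcibex-", length: int = 15) -> str:
    """Return prefix + a random lowercase/digit suffix (hypothetical helper)."""
    suffix = "".join(secrets.choice(ALPHABET) for _ in range(length))
    name = prefix + suffix
    if not 3 <= len(name) <= 63:
        raise ValueError("S3 bucket names must be 3-63 characters long")
    return name


print(random_bucket_name())
```

Paste the printed name into the Bucket name field, and later into the BUCKET_NAME environment variable of the Lambda.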

You don’t need anything fancy here. We’ll pass the bucket name to the Lambda via environment variables. Screenshot of my settings:

General configuration

• AWS Region: where the bucket lives (e.g., us-east-2).

• Bucket type: General purpose (the default).

• Bucket name: globally unique and DNS-friendly.

Object Ownership

• ACLs disabled (recommended): use IAM policies; simplest.

• ACLs enabled: legacy; only if you need object-level ACLs.

Block Public Access

• Keep ON unless you purposely serve public assets.

Bucket Versioning

• Disable: only latest copy kept.

• Enable: keeps old versions; costs more.

Tags (optional)

• Key–value labels (e.g., project=pcibex, env=prod).

Default encryption

• Enable: S3 encrypts objects at rest (SSE-S3 or SSE-KMS).

Advanced → Object Lock

• WORM-style retention; only if you need compliance features.

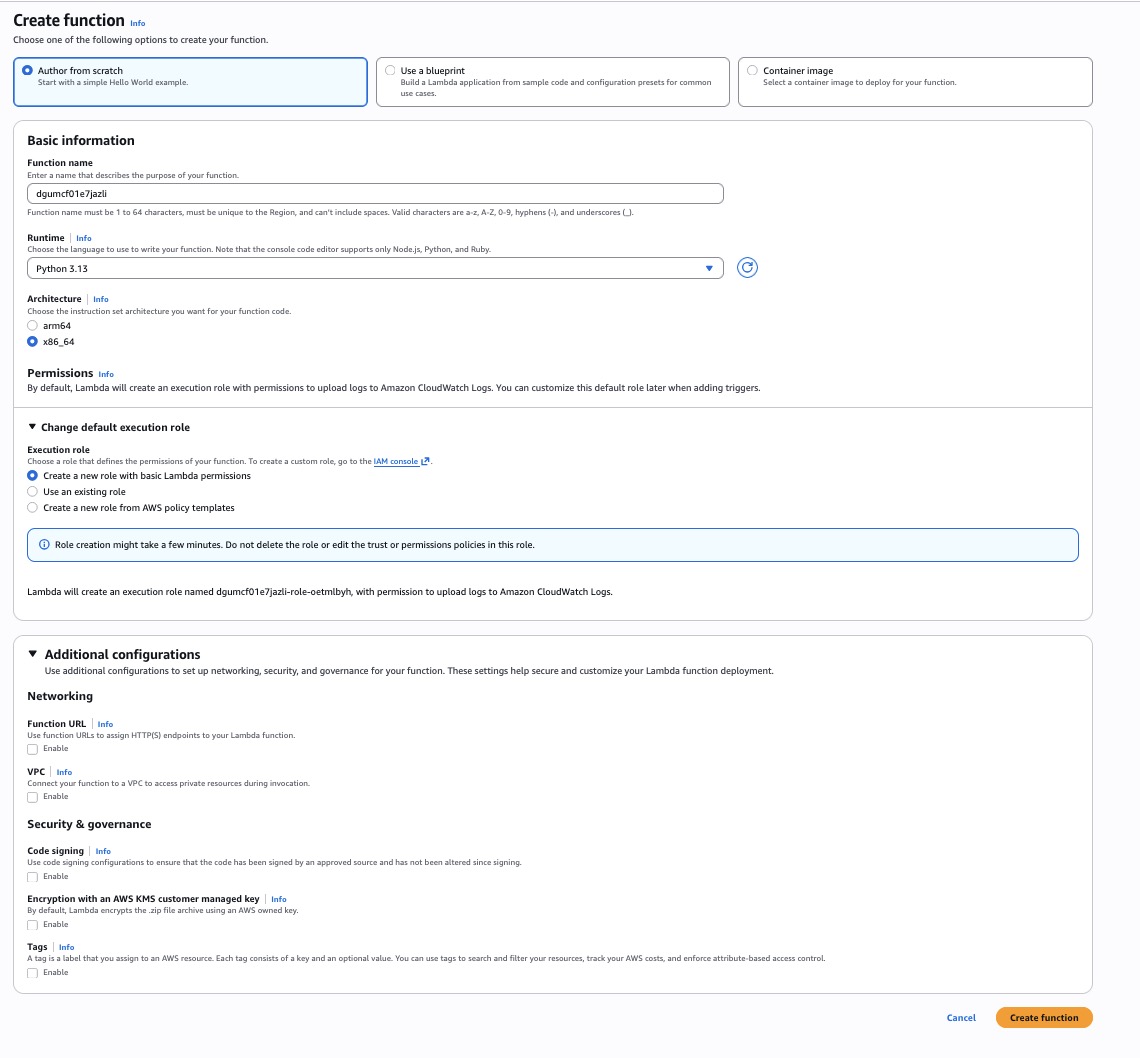

Create the Lambda (Python)

From the AWS search bar, open Lambda (https://aws.amazon.com/lambda).

- Create function → Author from scratch.

- Name: pcibex-s3-recorder (random suffixes are fine).

- Runtime: Python 3.13 (or the latest available later).

- Permissions: create a new role with basic Lambda permissions.

Lambda creation screenshot:

- Create method: Author from scratch vs blueprint vs container image.

- Basic info: function name; runtime (Python 3.13 here); architecture.

- Permissions: execution role (lets Lambda write logs, etc.).

- Additional config: Function URL (not needed here), VPC (skip unless needed), code signing, KMS encryption, tags.

Click Create function. AWS creates the runtime + role and drops you into the editor.

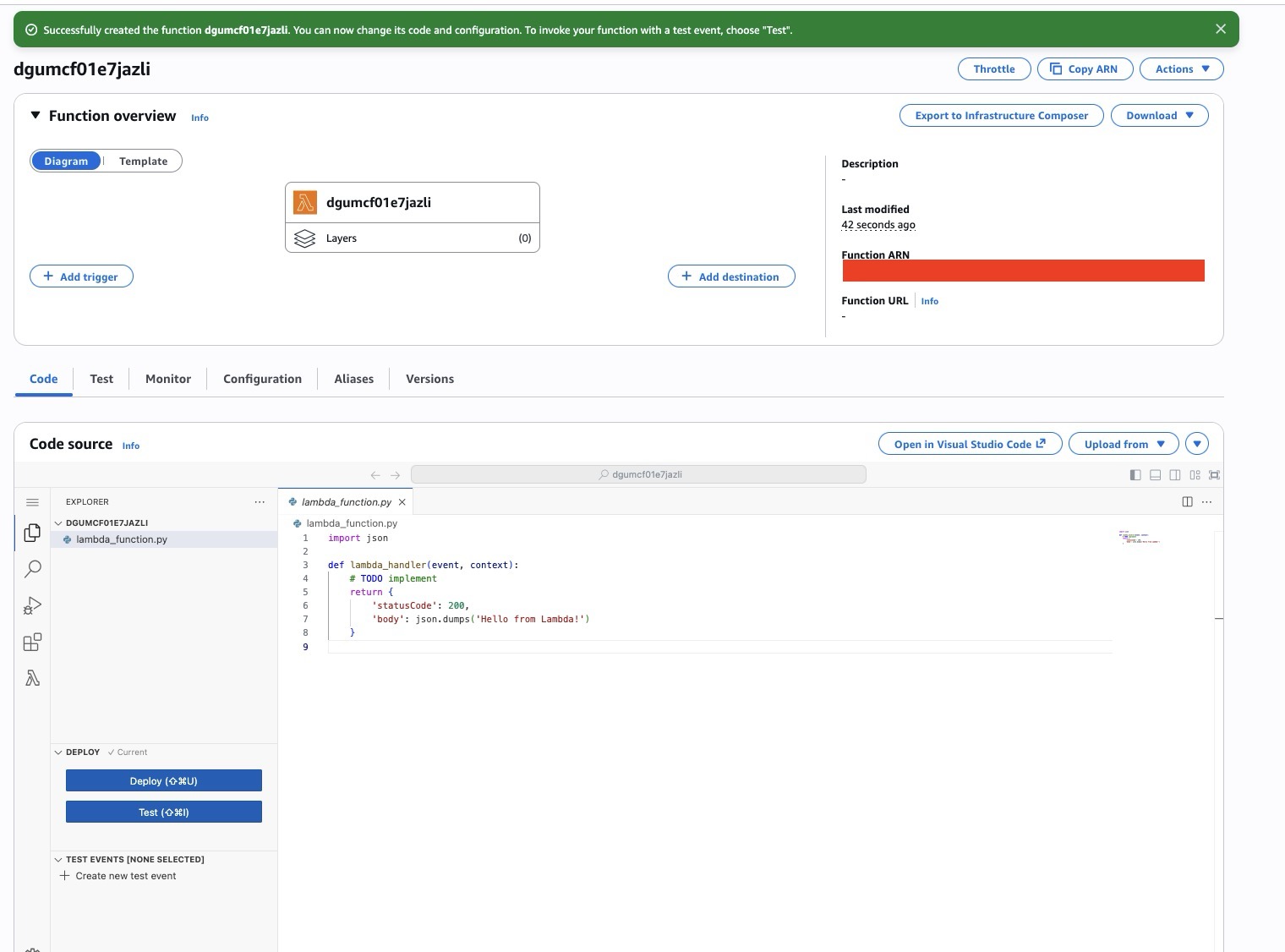

You’ll land on the function page with a code editor. Screenshot:

Add the handler and environment

Scroll to the inline editor. Replace the default code with the Python handler below, which writes the uploaded ZIP files to S3. Then:

- Set Environment variables:

BUCKET_NAME = your S3 bucket name from the “Create the S3 bucket” step

ALLOWED_ORIGIN = https://farm.pcibex.net (or your PCIbex origin if you are using your own server)

EXP_ID = optional prefix added to every object key (the code falls back to utku_diss if unset)

- Click Deploy.

import os

import json

import base64

import uuid

import boto3

import logging

# basic logger for CloudWatch

logger = logging.getLogger()

logger.setLevel(logging.INFO)

# s3 client

s3 = boto3.client("s3")

# environment-configurable values

BUCKET_NAME = os.environ.get("BUCKET_NAME", "pcibex-recordings-demo-2025")

ALLOWED_ORIGIN = os.environ.get("ALLOWED_ORIGIN", "https://farm.pcibex.net")

# experiment identifier; can also be set via env var

EXP_ID = os.environ.get("EXP_ID", "utku_diss") # e.g., "utku_diss", "exp1", etc.

def _cors_headers():

return {

"Access-Control-Allow-Origin": ALLOWED_ORIGIN,

"Access-Control-Allow-Credentials": "true",

"Access-Control-Allow-Methods": "OPTIONS,GET,POST",

"Access-Control-Allow-Headers": "content-type",

}

def _store_in_s3(content: bytes, filename: str) -> str:

# give each upload a unique key: EXP_ID_<uuid>_<filename>

prefix = f"{EXP_ID}_" if EXP_ID else ""

key = f"{prefix}{uuid.uuid4()}_{filename}"

s3.put_object(

Bucket=BUCKET_NAME,

Key=key,

Body=content,

ContentType="application/zip",

)

return key

def _parse_multipart(event):

"""Parse a simple multipart/form-data upload from API Gateway HTTP API.

PCIbex sends the file part under the name "file".

"""

headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}

ctype = headers.get("content-type")

if not ctype or "multipart/form-data" not in ctype:

raise ValueError("Expected multipart/form-data")

# extract boundary

boundary = ctype.split("boundary=")[1].strip().strip('"').encode()

# body comes base64-encoded from API Gateway for binary/multipart

body = event.get("body", "")

if event.get("isBase64Encoded"):

body = base64.b64decode(body)

else:

body = body.encode()

delimiter = b"--" + boundary

sections = body.split(delimiter)

file_bytes = None

filename = "recordings.zip"

for sec in sections:

if not sec or sec in (b"--", b"--\r\n"):

continue

head, _, data = sec.partition(b"\r\n\r\n")

if not data:

continue

# trim trailing CRLF and optional --

data = data.rstrip(b"\r\n")

if data.endswith(b"--"):

data = data[:-2]

head_text = head.decode(errors="ignore")

if 'name="file"' in head_text:

# get filename if present

for line in head_text.split("\r\n"):

if "filename=" in line:

filename = line.split("filename=", 1)[1].strip().strip('"')

file_bytes = data

break

if file_bytes is None:

raise ValueError("No file part named 'file' found")

return filename, file_bytes

def lambda_handler(event, context):

# detect HTTP method from API Gateway v2 (HTTP API)

method = event.get("requestContext", {}).get("http", {}).get("method", "GET")

logger.info(f"method={method}")

# 1. handle CORS preflight

if method == "OPTIONS":

return {

"statusCode": 200,

"headers": _cors_headers(),

"body": ""

}

# 2. PCIbex calls the URL once at start with GET

if method == "GET":

return {

"statusCode": 200,

"headers": _cors_headers(),

"body": json.dumps({"ok": True})

}

# 3. actual upload: POST multipart/form-data

if method == "POST":

try:

filename, data = _parse_multipart(event)

key = _store_in_s3(data, filename)

return {

"statusCode": 200,

"headers": _cors_headers(),

"body": json.dumps({"ok": True, "key": key})

}

except Exception as e:

logger.exception("Upload failed")

return {

"statusCode": 400,

"headers": _cors_headers(),

"body": json.dumps({"ok": False, "error": str(e)})

}

# anything else: not allowed

return {

"statusCode": 405,

"headers": _cors_headers(),

"body": json.dumps({"error": "Method not allowed"})

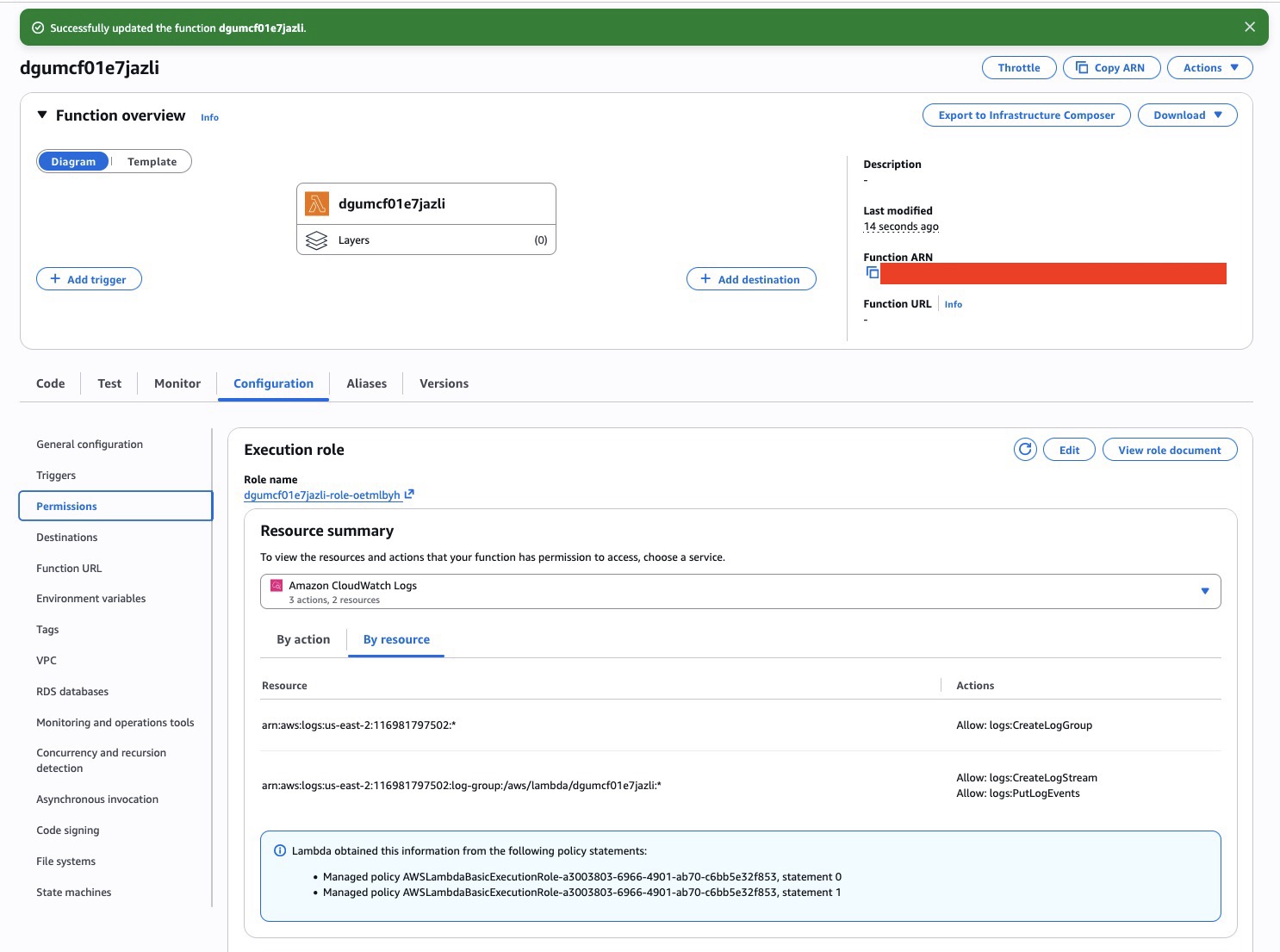

}Give the Lambda S3 write permissions (IAM)

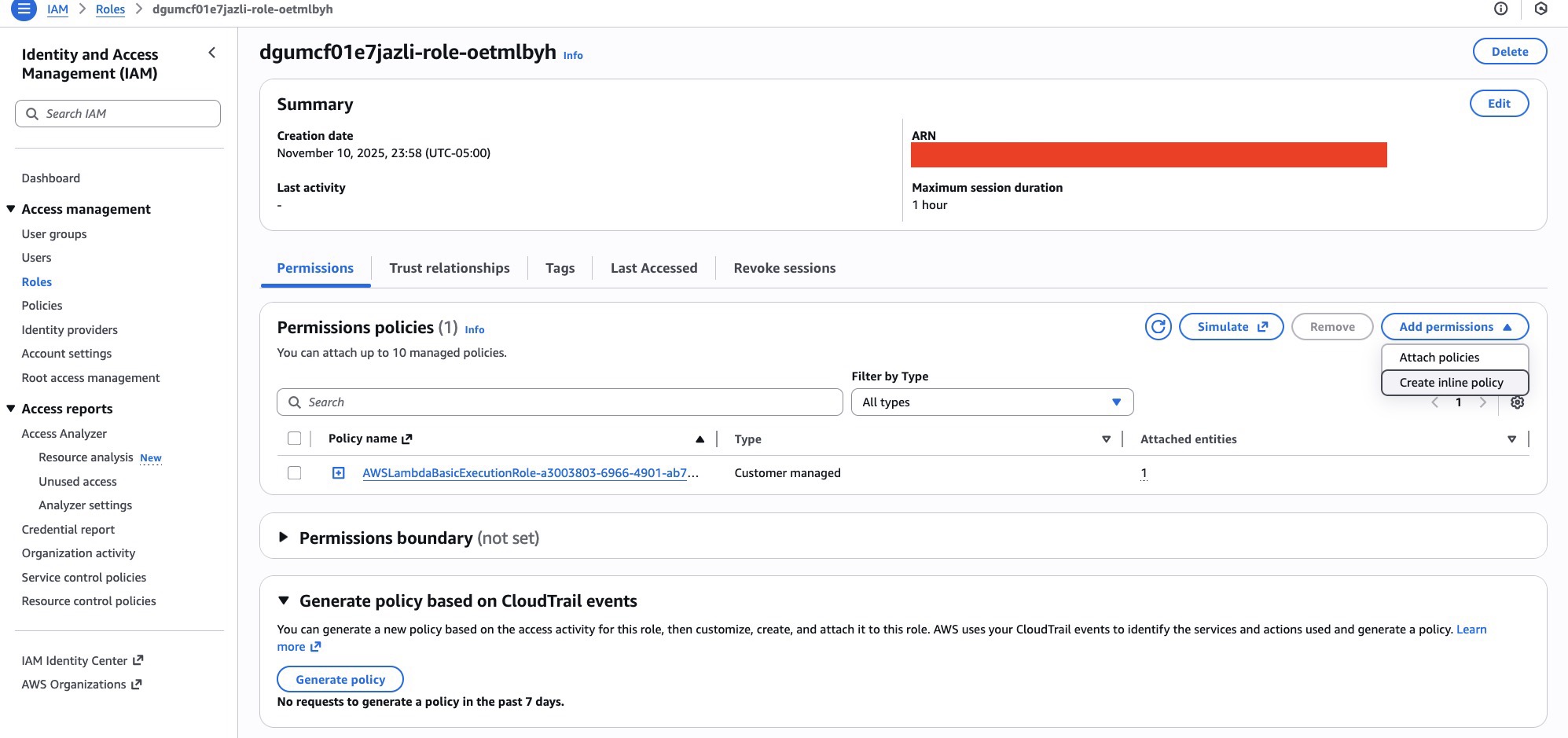

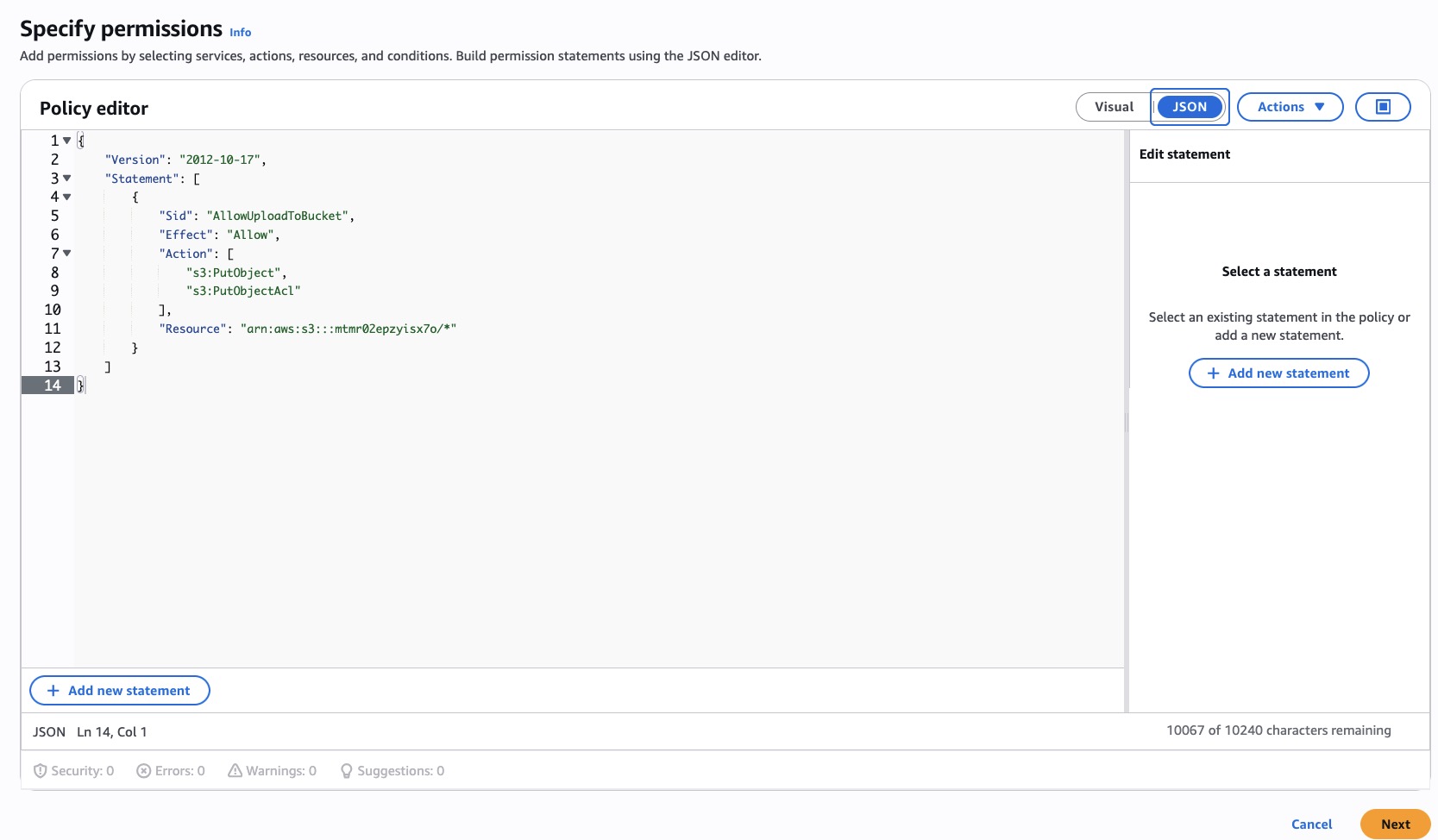

Back on the Lambda page: Configuration → Permissions → Role name (click it to open IAM).

- Add permissions → Create inline policy → JSON editor.

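- Allow s3:PutObject (and s3:PutObjectAcl if you need it) on your bucket’s objects by pasting the JSON policy below, with the bucket name replaced by yours. The handler above never sets an ACL, so s3:PutObject alone is enough for it.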

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowUploadToBucket",
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:PutObjectAcl"
      ],
      "Resource": "arn:aws:s3:::pcibex-recordings-demo-2025/*"
    }
  ]
}

Click Next, give it a name, Create policy.

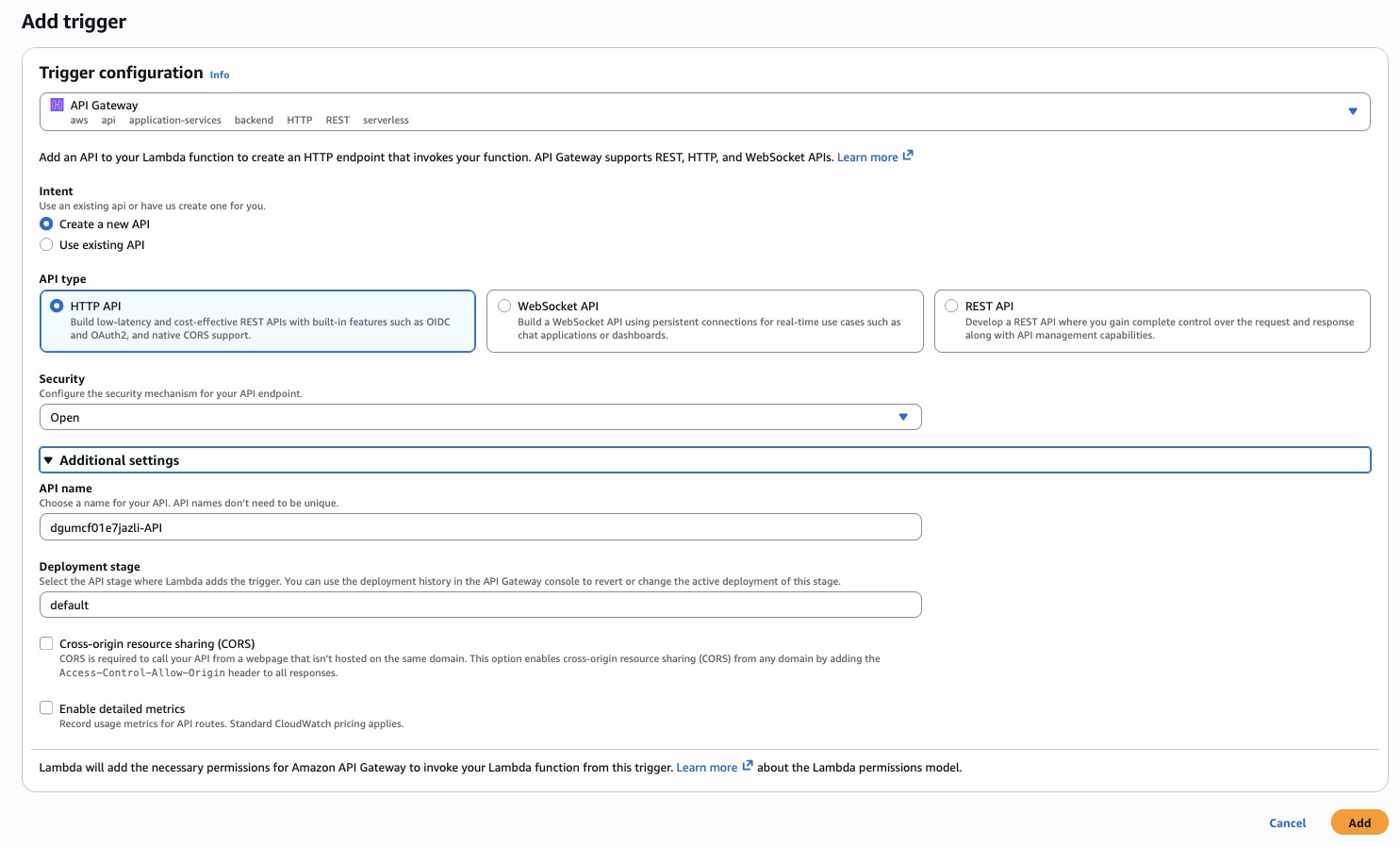
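To avoid hand-editing the bucket name inside the JSON, the policy document can be generated. This is a minimal sketch, not an official AWS template: the helper name upload_only_policy is hypothetical, and it deliberately grants only s3:PutObject on the assumption that you keep ACLs disabled on the bucket.

```python
import json


def upload_only_policy(bucket_name: str) -> dict:
    """Build an inline policy allowing only s3:PutObject on the bucket's objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowUploadToBucket",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                # the trailing /* targets objects in the bucket, not the bucket itself
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


print(json.dumps(upload_only_policy("pcibex-recordings-demo-2025"), indent=2))
```

The printed JSON can be pasted into the same inline policy editor.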

Expose the Lambda over HTTP (API Gateway)

Return to your Lambda page and click Add trigger.

- Source: API Gateway → Create an API.

- HTTP API (not REST).

- Security: Open (you can restrict later).

- Create.

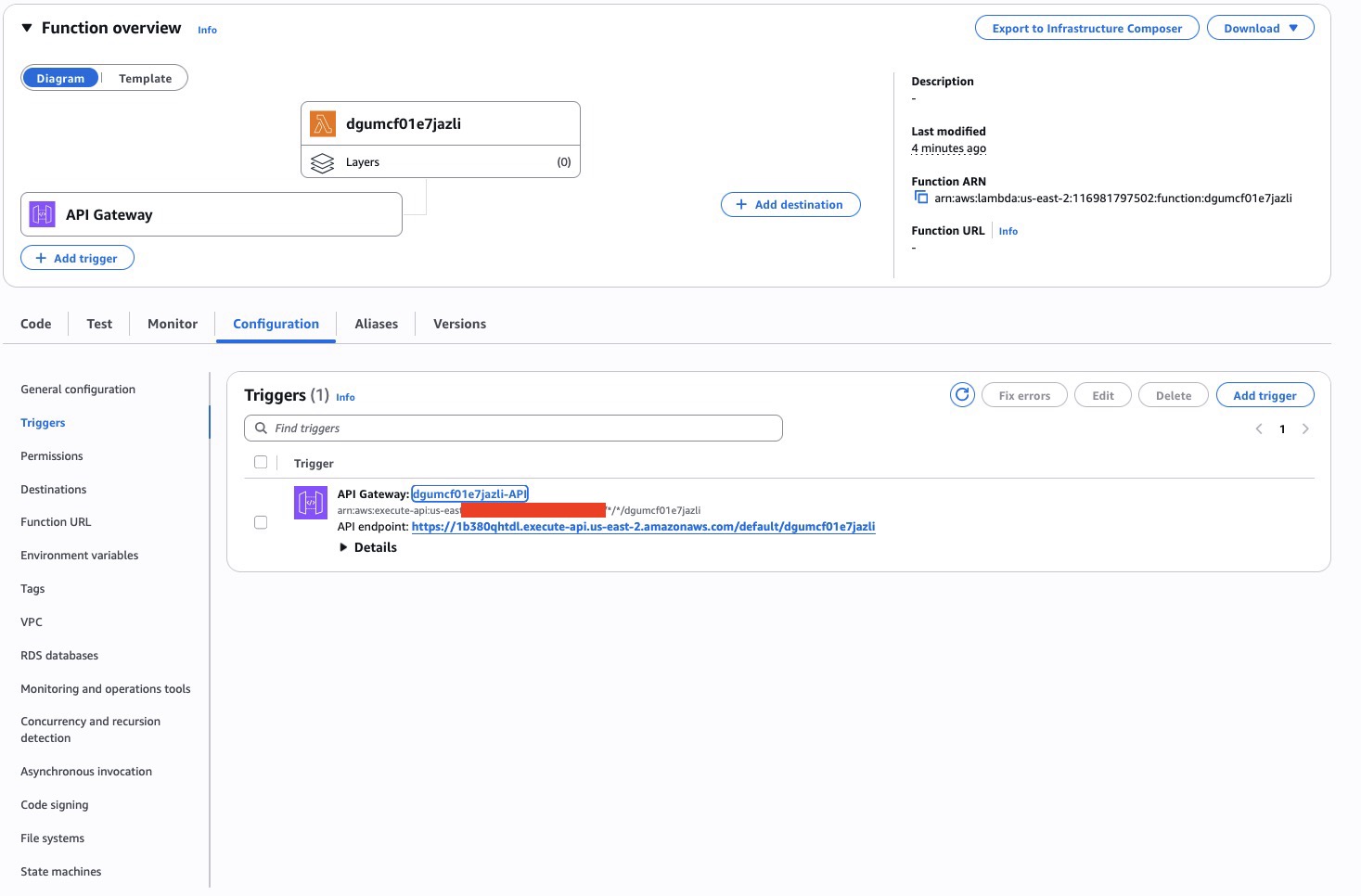

You should now see an API Gateway trigger box on the Lambda page. Open it to find the Invoke URL; this is the URL PCIbex will call.

Example format:

https://abc123.execute-api.us-east-2.amazonaws.com/default/pcibex-s3-recorder

There’s also a link into the API Gateway console (we’ll use it for CORS next). Screenshot of the Lambda page with trigger:

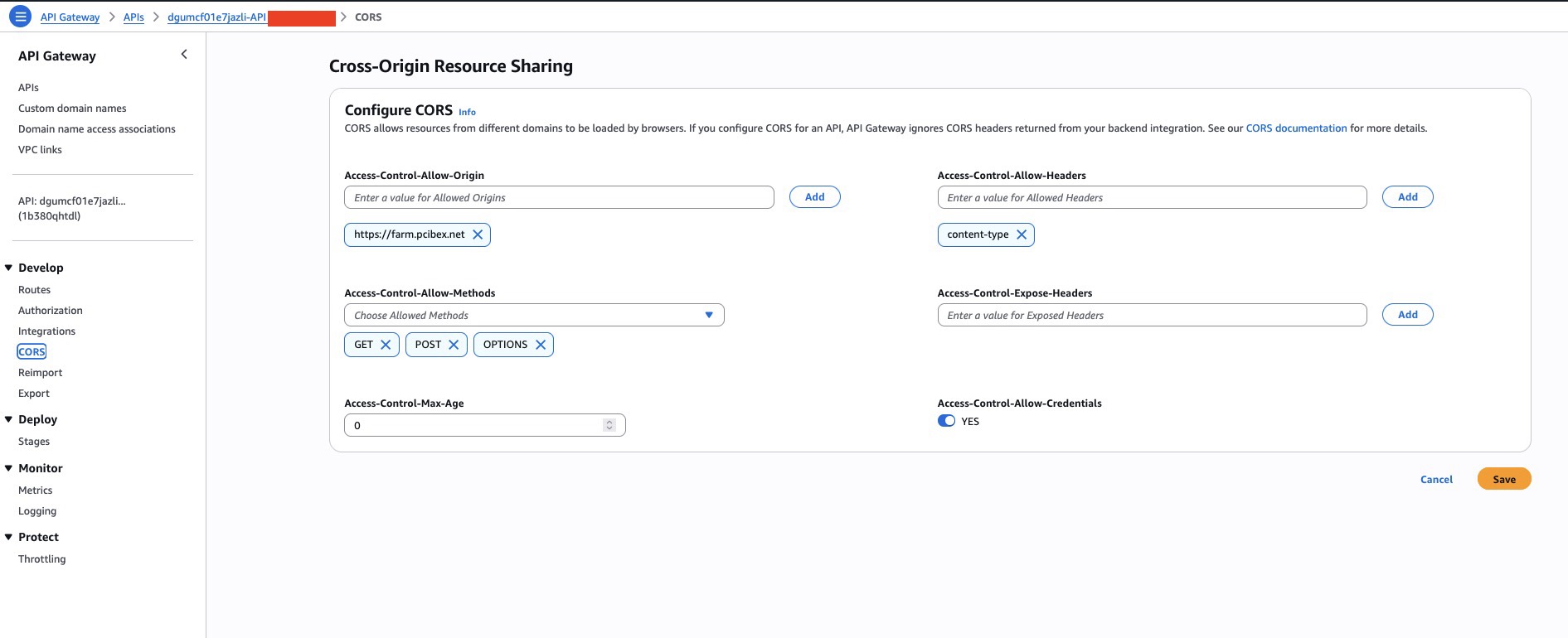

Enable CORS in API Gateway

In the API Gateway console for your HTTP API:

- Go to CORS.

- Access-Control-Allow-Origin: https://farm.pcibex.net

- Access-Control-Allow-Methods: GET, POST, OPTIONS

- Access-Control-Allow-Headers: content-type

- Access-Control-Allow-Credentials: On

- Save (HTTP APIs usually auto-deploy these changes).

Your Lambda also returns matching CORS headers, so the browser will accept the responses.
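The same CORS settings can also be applied from a script instead of the console. The sketch below is untested against a live account and makes several assumptions: boto3 is installed, your local AWS credentials are allowed to update the API, and you know the API ID (the abc123 part of the invoke URL). The helper names cors_configuration and apply_cors are illustrative.

```python
def cors_configuration(origin: str = "https://farm.pcibex.net") -> dict:
    """CORS settings matching the console values listed above."""
    return {
        "AllowOrigins": [origin],
        "AllowMethods": ["GET", "POST", "OPTIONS"],
        "AllowHeaders": ["content-type"],
        "AllowCredentials": True,
    }


def apply_cors(api_id: str, region: str = "us-east-2") -> None:
    """Push the CORS settings to an HTTP API (assumes boto3 and valid credentials)."""
    import boto3  # imported lazily so the sketch loads even without boto3 installed

    client = boto3.client("apigatewayv2", region_name=region)
    client.update_api(ApiId=api_id, CorsConfiguration=cors_configuration())


print(cors_configuration())
```

Calling apply_cors("abc123") with your real API ID would replace the API's current CORS configuration, so check the printed dictionary first.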

Test the endpoint

Before wiring PCIbex, sanity-check the GET route:

- Visit your API URL directly (API Endpoint in the Triggers tab) in a browser.

- You should see: {"ok": true}

If not, open CloudWatch Logs for the function and look for errors.
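The same sanity check can be scripted with the standard library. This is a small sketch: the helper names is_ok_body and check_endpoint are hypothetical, and the URL in the usage note is the placeholder from this post, not a live endpoint.

```python
import json
import urllib.request


def is_ok_body(body: str) -> bool:
    """True if body is a JSON object with "ok": true, as the handler returns for GET."""
    try:
        return json.loads(body).get("ok") is True
    except (ValueError, AttributeError):
        # not JSON at all, or JSON that is not an object
        return False


def check_endpoint(url: str, timeout: float = 10.0) -> bool:
    """GET the invoke URL and report whether it answered 200 with {"ok": true}."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.status == 200 and is_ok_body(resp.read().decode("utf-8"))
```

For example, check_endpoint("https://abc123.execute-api.us-east-2.amazonaws.com/default/pcibex-s3-recorder") should return True once the trigger is live. Note that urlopen raises an HTTPError for 4xx/5xx responses rather than returning False.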

Wire up PCIbex / PennController

The original S3 tutorial includes a sample experiment; here’s a copy you can start from:

Experiment editor link: [click here to open the sample experiment](https://farm.pcibex.net/experiments/new?from=OAHoDO)

In that template, replace:

InitiateRecorder("https://my.server/path/to/file.php").label("init")

with:

const LAMBDA_URL = "https://abc123.execute-api.us-east-2.amazonaws.com/default/pcibex-s3-recorder";

InitiateRecorder(LAMBDA_URL).label("init");

Now PCIbex will GET the Lambda at init (expecting { "ok": true }) and later POST the ZIP with UploadRecordings(...).

Async vs end-of-experiment uploads

The template uploads asynchronously after each trial via:

// Run the 'letter'- and 'picture'-labeled trials (see Template below) in a randomized order,

// and insert the 'async'-labeled trial (see UploadRecordings below) between each trial

sepWith("async", rshuffle("letter","picture"))

You can keep that pattern, or upload once at the end with a single UploadRecordings() in your sequence. The end-of-experiment approach allows participants to download their ZIP if the server is unreachable; the fully async pattern does not.

What happens under the hood

- InitiateRecorder(...) makes a GET → Lambda returns { "ok": true }.

- UploadRecordings(...) sends files with POST multipart/form-data.

- Lambda parses the upload and stores it in S3 → responds { "ok": true, "key": "..." }.

- No extra JS is needed.
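To make the POST step concrete, here is a sketch of roughly what the Lambda receives, written as a helper that builds a fake event for local testing. It assumes the API Gateway HTTP API (payload v2) event shape the handler reads, and a single part named "file" as described above. The helper name make_upload_event is hypothetical, and the 22-byte payload is just an empty ZIP archive standing in for real recordings.

```python
import base64
import uuid


def make_upload_event(zip_bytes: bytes, filename: str = "recordings.zip") -> dict:
    """Build a fake HTTP API (payload v2) event carrying one multipart file part."""
    boundary = uuid.uuid4().hex
    body = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/zip\r\n\r\n"
    ).encode() + zip_bytes + f"\r\n--{boundary}--\r\n".encode()
    return {
        "requestContext": {"http": {"method": "POST"}},
        "headers": {"content-type": f"multipart/form-data; boundary={boundary}"},
        # API Gateway base64-encodes binary bodies before handing them to Lambda
        "isBase64Encoded": True,
        "body": base64.b64encode(body).decode("ascii"),
    }


# An empty ZIP archive: just the end-of-central-directory record.
event = make_upload_event(b"PK\x05\x06" + b"\x00" * 18)
print(event["headers"]["content-type"])
```

With the handler code in the same module, lambda_handler(event, None) exercises the POST branch locally. It will still call the real S3 client unless you replace the module-level s3 object with a stub.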

Verify uploads

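After a test run, you should see keys like the one below. The leading utku_diss_ is the EXP_ID prefix; it is absent if you set EXP_ID to an empty string.

utku_diss_4f4c3a71-6ad4-4aad-9d2a-f932b261a0a5_recordings.zip

If uploads fail or you don’t see new objects, open CloudWatch Logs for the Lambda and look for method=POST entries and any stack traces.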
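Checking for new objects can also be done from a script on your own machine. This is a sketch under assumptions: the helper name list_upload_keys is hypothetical, and the credentials you run it with need s3:ListBucket on the bucket, which the Lambda role above does not grant (and does not need).

```python
def list_upload_keys(s3_client, bucket: str, prefix: str = "") -> list:
    """Return all object keys under prefix, following pagination tokens."""
    keys = []
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        # "Contents" is missing from the response when nothing matches
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
        if not page.get("IsTruncated"):
            return keys
        kwargs["ContinuationToken"] = page["NextContinuationToken"]


# Usage (needs boto3 and local AWS credentials):
# import boto3
# print(list_upload_keys(boto3.client("s3"), "pcibex-recordings-demo-2025", "utku_diss_"))
```

Passing the client in as an argument keeps the function easy to try with a stub before pointing it at the real bucket.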

Notes and variations

- Change ContentType if you know PCIbex uploads a different type.

- Use “subfolders” by prefixing the object key, e.g., f"pcibex/{uuid.uuid4()}_{filename}".

- If you host PCIbex elsewhere, update both ALLOWED_ORIGIN and API Gateway CORS.

- Add experiment/version info to keys for traceability (e.g., exp1-v3/...).

- You can host stimuli out of S3, but I usually ship a ZIP from Git for simplicity:

PreloadZip("https://raw.githubusercontent.com/utkuturk/silly_exp/main/my_pictures.zip");

As you get comfortable, consider recreating this setup via the AWS CLI; it’s faster and more reproducible than clicking around the console. I am not there yet. When I streamline this process with command-line tools, I will share that as well.
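The key-naming variations from the notes above (a "subfolder" prefix plus experiment and version labels) can be combined in one small function. This is only a sketch: build_key and its default values are hypothetical, and you would call it in place of the key-building lines inside _store_in_s3.

```python
import uuid


def build_key(filename: str, exp_id: str = "exp1", version: str = "v3",
              folder: str = "pcibex") -> str:
    """Compose folder/exp-version/<uuid>_<filename>; empty parts are skipped."""
    label = "-".join(p for p in (exp_id, version) if p)
    parts = [p for p in (folder, label) if p]
    # the uuid keeps keys unique even when participants upload the same filename
    parts.append(f"{uuid.uuid4()}_{filename}")
    return "/".join(parts)


print(build_key("recordings.zip"))
```

S3 has no real folders; the slashes are simply part of the key, but the console displays them as a folder hierarchy.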